How to use AI to query a customer database without writing SQL

You've finally made it to Data Analyst at your dream company (yay!). You're excited to finally put all those hardcore SQL skills you've acquired over the years, maybe even use some of the new and shiny dataframe frameworks. You get to your desk with a freshly brewed cup of coffee, ready to dive deep into the latest of the latest. But the top urgent requests are the usual, "Hey! Could you give me a list of our customers in New York who bought at least 10 avocados in the last year?" So you open your good ol' customers-filter.sql replace "Los Angeles" with "New York" and "Avocado" with "Bagel" in the query, and you attach that CSV file to Slack. This repetitive, mechanical (and frankly boring) process is very frustrating and time consuming. What if your non-technical colleagues could ask the database directly when they needed it?

What is a SQL Toolkit?

The SQL Toolkit enables natural language interactions with your database. Instead of writing complex queries, you simply describe what you need in plain English. The toolkit translates your request into a proper set of parameters and returns formatted results, preventing hallucinations by directly accessing your data.

Key features:

- Natural language database querying

- Properly formatted results

- Built-in data validation

Why Arcade Makes This Possible

We're using an Arcade tool-call to interact with your SQL database. Tool-calling means AI can take specific actions on your behalf, rather than just providing information. Instead of just suggesting SQL queries, the agent uses Arcade to directly interact with your database with proper authentication, the tool queries the database, and returns accurate results. This means you get real data, not AI hallucinations, while maintaining complete control over what the agent can access.

Getting Started

In this tutorial, we will build an Arcade toolkit that can interface with a database using natural language to generate and format results. At a high-level, we will provide the LLM agent with a function that knows how to correctly access the data to prevent hallucinations.For this we will:

- Create a new toolkit

- Write a query generation function for the LLM to use

- Build evals for tool-calls

- Invoke our new tool from the Playground

Setup

First, let's make sure we have the required software installed:

- Python 3.10+ & pip

We'll start by creating a directory and a virtual environment for our toolkit

mkdir arcade-sql-agent

cd arcade-sql-agent

python -m venv .venv

source .venv/bin/activateWe then add the required Python packages to the active environment.

pip install 'arcade-ai[evals]' python-dotenv psycopg2-binaryWe can now invoke the arcade command from our terminal, which we can use to create our new toolkit. But first, let's log in to arcade. If you don't have one, now's the time to create a new Arcade account. Then go to the terminal and run:

arcade loginYour browser will be opened and once you're done you should see a message in the terminal showing your Arcade API key.

Create a new toolkit

Now let's start to develop our toolkit from scratch. First, we'll use arcade to bootstrap a new toolkit project template:

arcade new

You'll be prompted to give the toolkit a name, a description, a GitHub username, and an e-mail.

I used these values:

Name of the new toolkit?: sql_customers

Description of the toolkit?: Query the customer database using natural language

Github owner username?: torresmateo

Author's email?: mateo@arcade.devYou will see that a sql_customers directory was created for you, and populated with a project template. It includes an organized skeleton for us to add our functions, tests, and evals, with utilities to install the toolkit locally to test it.

Here's what the directory looks like:

├── LICENSE

├── Makefile

├── README.md

├── arcade_sql_customers

│ ├── __init__.py

│ └── tools

│ ├── __init__.py

│ └── hello.py

├── codecov.yaml

├── evals

│ └── eval_sql_customers.py

├── pyproject.toml

├── tests

│ ├── __init__.py

│ └── test_sql_customers.py

└── tox.iniThe tools directory is where tool code goes, and by default we get hello.py, which includes a basic tool that takes a name and greets it. We'll replace this with our own tool.

Understanding database schema for tool calling

For simplicity, we'll use Supabase to host a database. Here's the schema of the table:

create table people

(

id INTEGER primary key,

name TEXT,

age INTEGER,

location TEXT,

occupation TEXT,

email TEXT

);We have prepared a downloadable version of this schema with 100 rows, that you can run to recreate the same database. If you want to follow along, please go ahead, create a Supabase account, and load the schema into a new database.

Write a query generation function for the LLM to use

Open the sql_customer directory in your code editor, and replace the hello.py file with a more descriptive query.py file under arcade_sql_customer/tools. We'll begin by importing the necessary packages, and configure a simple logger:

import sqlite3

from typing import Annotated, Optional

from arcade.sdk import tool, ToolContext

from arcade_sql_customers.utils import get_database_connection

# Configure the logger

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)If you're following along, please download the utils.py script from the repo, or better, clone the entire thing so it's easier to run!Let's begin by following the typical text-to-SQL approach, which asks the LLM to generate SQL directly from a natural language prompt:

@tool(requires_secrets=["database_url"])

def direct_query(

context: ToolContext,

query: Annotated[str, "The query to run in the database"]

) -> Annotated[dict, "The data returned from the database"]:

"""

Query the data from the 'people' table with a query generated by the LLM.

"""

logger.info("Starting query_customer_data function")

logger.debug(f"Query received: {query}")

# Connect to the database

conn = get_database_connection(context)

cursor = conn.cursor()

# Execute the query and get the rows

cursor.execute(query)

rows = cursor.fetchall()

logger.info(f"Query executed successfully. Rows fetched: {len(rows)}")

# Prepare the results as a list of dictionaries

column_names = [description[0] for description in cursor.description]

results = [dict(zip(column_names, row)) for row in rows]

logger.debug(f"Results prepared: {results}")

conn.close()

return {"results": results}See the full implementation on the repo for this tutorial: https://github.com/ArcadeAI/arcade-sql-agent-tutorial/blob/main/arcade_sql_customers/tools/query.py#L14

The @tool decorator above our definition instructs the Arcade worker to export that as a tool that the engine can make available to LLMs. The ToolContext we receive will allow us to get the proper user information to configure our tool. In this case, we use require_secrets to ensure we're getting the path to the SQLite database as a secret to the tool.

Let's deploy this tool and see how it works. We can deploy a cloud worker running the following commands (it may take many seconds the first time):

arcade deploy

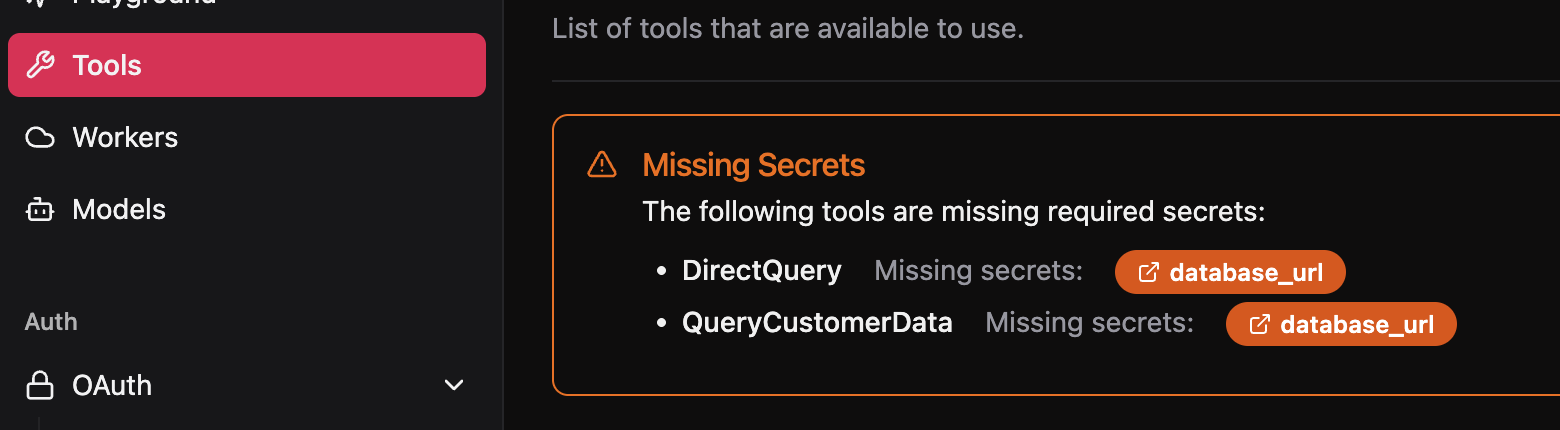

This will deploy a worker with the new tools on the cloud, and register it to our Arcade account. If we login now, we'll see our tools are added to the list in the "Tools" tab, and also a warning asking us to set the missing required secrets:

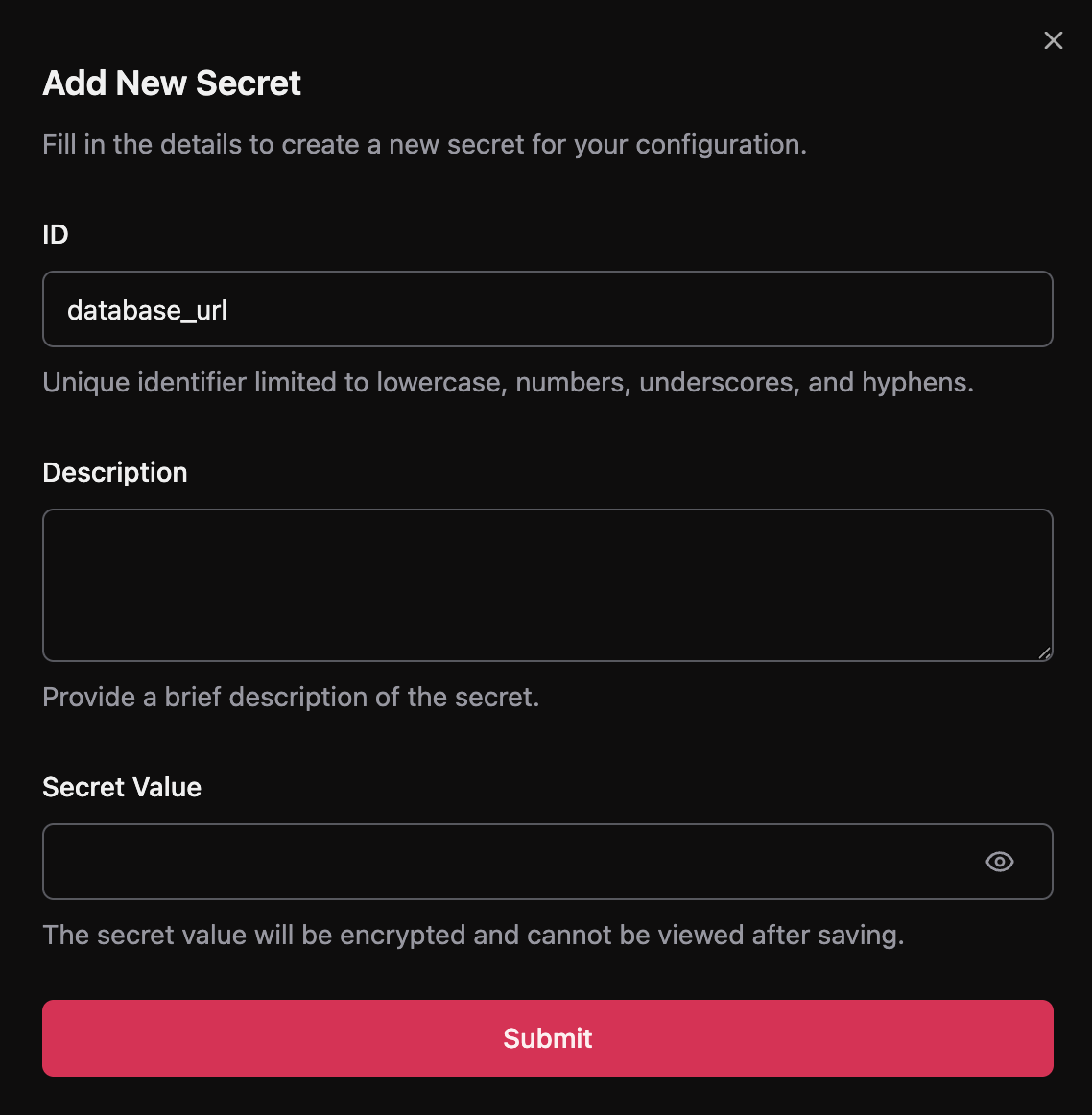

To configure the secret required by our tool, let's get the connection string from Supabase. Click on the orange link, and paste it into the "Secret Value" field.

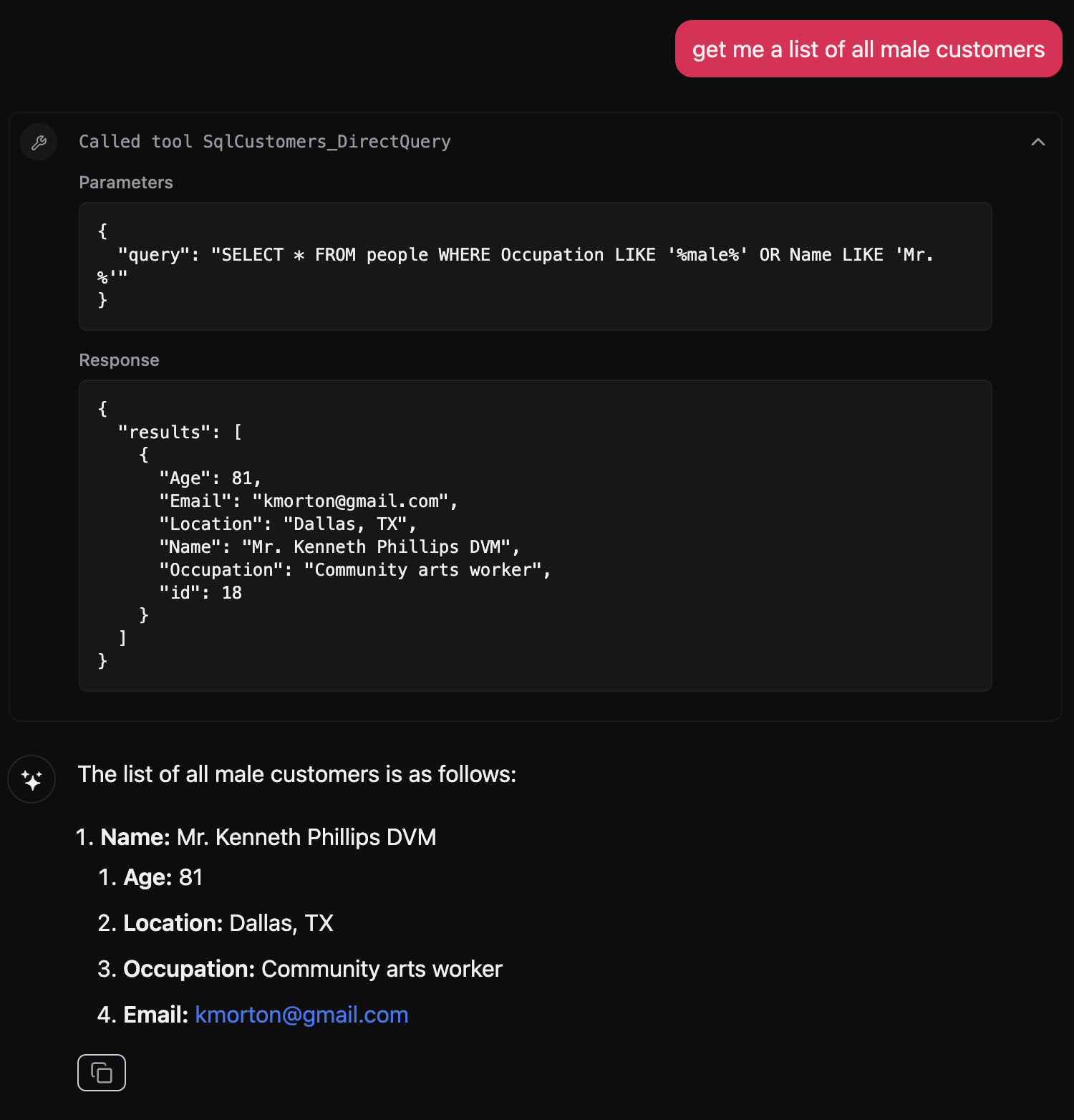

We can now test our direct_query tool. This function gets the LLM to generate a SQL query and the function will attempt to run it directly on the database. While this is useful in simple scenarios, it isn't guaranteed to work very time:

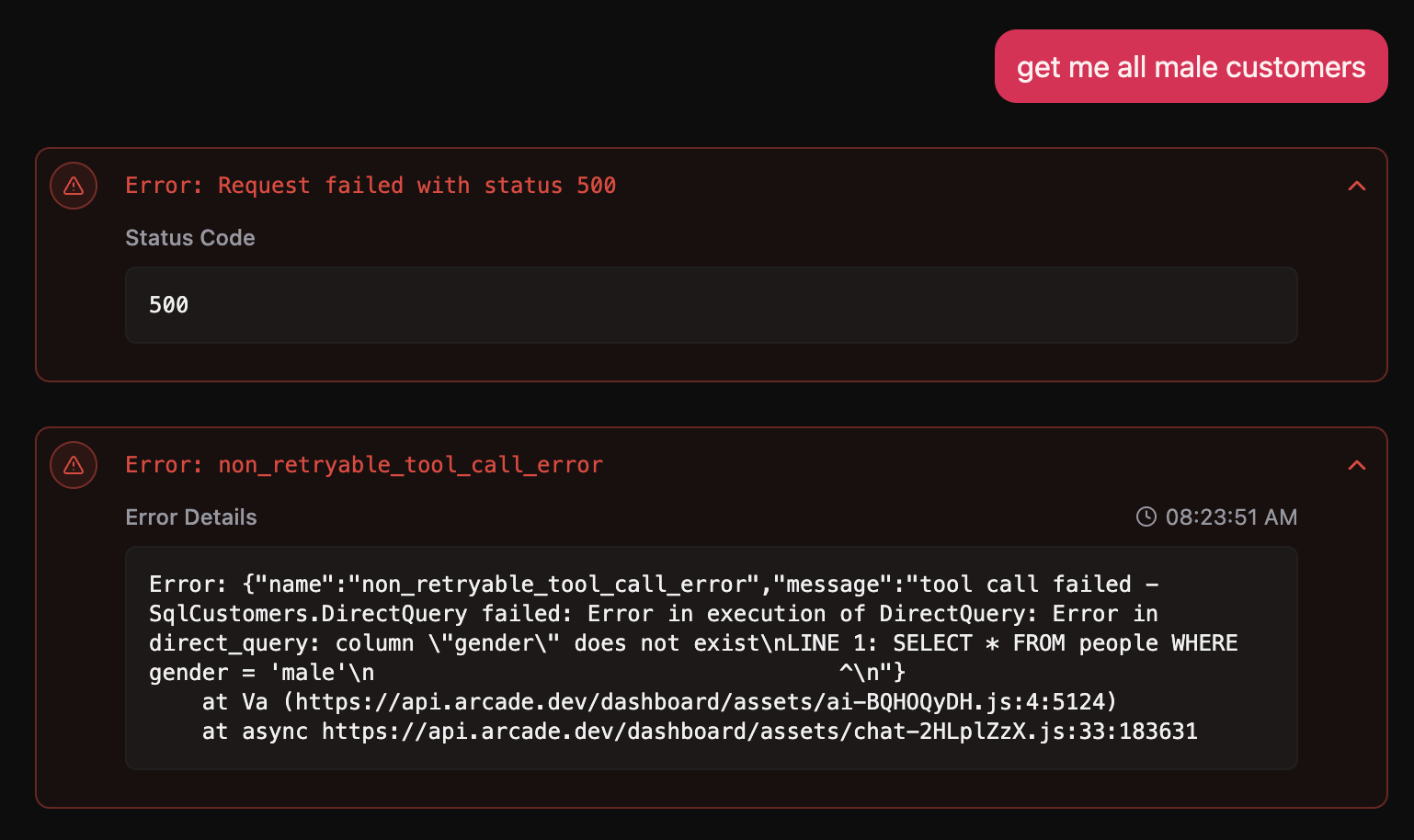

LLMs are prone to hallucinations. In this case, the model incorrectly assumed that "male" in the Occupation field or "Mr." in the customer's Name was a valid way to determine gender. However, based on our schema, it should have indicated that the necessary columns were missing to generate an accurate response. This kind of mistake can mislead users into believing that Mr. Phillips is the only male customer in the database, leading to flawed decision-making. An even more dangerous consequence of giving such freedom to the LLM is that if convinced, it might generate DELETE or DROP statements, or hallucinate queries so complex that they have a performance impact on the entire database. Or are simply using the wrong SQL dialect, or use non-existing columns:

We can mitigate this by giving the LLM a more robust, controlled interface to our database, with explicit parameters that are clear it can use. The strategy will be to offer a clear set of parameters that the LLM can use to filter the table. The tool will take care of sanitizing the input and building the query, ensuring the right dialect and preventing anything we don't want from hitting our database:

@tool(requires_secrets=["database_url"])

def query_customer_data(

context: ToolContext,

columns_to_select: Annotated[

Optional[list[str]],

"List of columns to select from the 'people' table."

" If None, all columns are selected.",

] = None,

filter_by_id: Annotated[

Optional[int],

"Filter the results by ID."

] = None,

filter_by_name: Annotated[

Optional[str],

"Filter the results by name."

] = None,

# ... more parameters in the repo!

order_by: Annotated[

Optional[str],

"Column to order the results by. Must be a valid column name."

] = None,

limit: Annotated[

int,

"The maximum number of rows to return."

] = 20,

) -> Annotated[

dict,

"The data returned from the database."]:

"""

Query the data from the 'people' table with the provided parameters.

"""

# Build the base query

valid_columns_list = ["id", "name", "age",

"location", "occupation", "email"]

valid_columns = "*"

if columns_to_select:

# Collect valid columns to select, ignore any others

valid_columns = [

col for col in columns_to_select if col in valid_columns_list]

query = f"SELECT {valid_columns} FROM people"

params = []

logger.debug(f"Initial query: {query}")

# Build WHERE clause with filters

where_clauses = []

if filter_by_id is not None:

where_clauses.append('id = %s')

params.append(filter_by_id)

logger.debug(f"Filtering by id: {filter_by_id}")

if filter_by_name is not None:

where_clauses.append('Name ILIKE %s')

params.append(f"%{filter_by_name}%")

logger.debug(f"Filtering by Name: {filter_by_name}")

# ... handle more parameters

# add the WHERE clause if needed

if where_clauses:

query += " WHERE " + " AND ".join(where_clauses)

logger.debug(f"Added WHERE clauses: {' AND '.join(where_clauses)}")

# Add ORDER BY clause if provided

if order_by:

if order_by not in valid_columns_list:

logger.error(f"Invalid order_by column: {order_by}")

return {"results": []}

query += f' ORDER BY "{order_by}"'

logger.debug(f"Ordering by: {order_by}")

# Add LIMIT clause

query += " LIMIT %s"

params.append(limit)

# Connect to the database

conn = get_database_connection(context)

cursor = conn.cursor()

# Execute the query and get the rows

cursor.execute(query, params)

rows = cursor.fetchall()

logger.info(f"Query executed successfully. Rows fetched: {len(rows)}")

# Prepare the results as a list of dictionaries

column_names = [description[0] for description in cursor.description]

results = [dict(zip(column_names, row)) for row in rows]

logger.debug(f"Results prepared: {results}")

conn.close()

return {"results": results}We shortened the code in the tutorial to avoid very long, verbose functions. See the full implementation on the repo for this tutorial: https://github.com/ArcadeAI/arcade-sql-agent-tutorial/blob/main/arcade_sql_customers/tools/query.py#L76

This is a great time to deploy and try again (If you don't remember how to do it, just scroll up 😉)

Build evals for tool-calls

Tests are a great way to validate that the function behaves predictably when passing different values. However, we should also test how well LLMs interact with our tools. Arcade provides us with a way to evaluate how well LLMs are using our tools by assessing:

- That the LLM picks the correct tool at the right time

- That it passes the correct parameters to the tool based on the context

- That it calls tools in the right sequence for multi-step tasks

Here we're providing a single tool to the LLM, so we're only testing the second aspect, but we'll use all of Arcade's evaluation mechanisms: Rubrics, Evaluation Suites, and Critics. Let's go ahead and replace the contents of sql_customers/evals/eval_sql_customers.py :

from arcade.sdk import ToolCatalog

from arcade.sdk.eval import (

EvalRubric,

EvalSuite,

ExpectedToolCall,

SimilarityCritic,

BinaryCritic,

tool_eval,

)

import arcade_sql_customers

from arcade_sql_customers.tools.query import query_customer_data

# Evaluation rubric

rubric = EvalRubric(

fail_threshold=0.85,

warn_threshold=0.95,

)

catalog = ToolCatalog()

catalog.add_module(arcade_sql_customers)

@tool_eval()

def sql_toolkit_query_customer_eval_suite() -> EvalSuite:

suite = EvalSuite(

name="sql_toolkit Tools Evaluation",

system_message=(

"You are an AI assistant with access to sql_toolkit tools. "

"Use them to help the user with their tasks."

),

catalog=catalog,

rubric=rubric,

)

# We'll add our evaluations here

return suiteLet's break down what's going on here:

- The

EvalRubricobject we're using instructs arcade to consider any evaluation score under 85% a failure, scores between 85% and 95% a warning, and anything above 95% as a success. - The

ToolCatalogobject collects the tools we want to make available to the LLM. - We use the

@tool_eval()decorator to tell Arcade that we want to use thesql_toolkit_query_customer_eval_suitefunction to define a suite of specific evaluations. - In the function, we initialize the suite with a system message, and we'll now add specific cases to the suite to test different scenarios using an LLM:

suite.add_case(

name="Getting names and emails from a given Name",

user_message="Get the names and emails of all customers named David",

expected_tool_calls=[ExpectedToolCall(

func=query_customer_data,

args={

"filter_by_name": "David",

"columns_to_select": ["name", "email"],

})],

rubric=rubric,

critics=[

SimilarityCritic(critic_field="filter_by_name", weight=0.5),

BinaryCritic(critic_field="columns_to_select", weight=0.5),

]

)See the full set of evaluation cases on the repo for this tutorial: https://github.com/ArcadeAI/arcade-sql-agent-tutorial/blob/main/evals/eval_sql_customers.py

Each case represents a prompt that a user may use to get the LLM to call our tool. The LLM should be able to parse the natural language included in user_message and populate the parameters of our tool with the correct parameters.

We use the ExpectedToolCall to define that query_customer_data is the correct function to call, as well as which values should be used in this scenario based on the context. For the first case, we want to assess that the LLM is able to correctly choose the values to pass to filter_by_name ( "David" ), and columns_to_select (["name", "email"]).

Since we're using ILIKE in our query builder, we don't really care that the value passed to filter_by_name parameter is not exactly the same as the one in our prompt ( "david" would work just as well as "DAVID" , "David" , or even "DaViD" ). In this scenario, we use a SimilarityCritic , which evaluates the similarity between the expected value we define in ExpectedToolCall and the actual value inferred by the LLM from the context.

However, we do care about the value passed to columns_to_select being correct ("E-mail" will not work to select the "email" column). In this scenario, we use the BinaryCritic, which checks that there is an exact match between the expected and actual values.

The weight parameters passed to the critics determine how relevant the score of the critic is to the overall evaluation case. The best practice and most intuitive setting for this is to make all the weights sum to 1.0 for each evaluation case, so it can easily be interpreted as a percent-based importance. You can read more about the types of critic and when to use them.

To evaluate this, we'll use the Arcade Engine, which already has access to our tools.

Install the toolkit locally:

make installAnd run the evaluation suite:

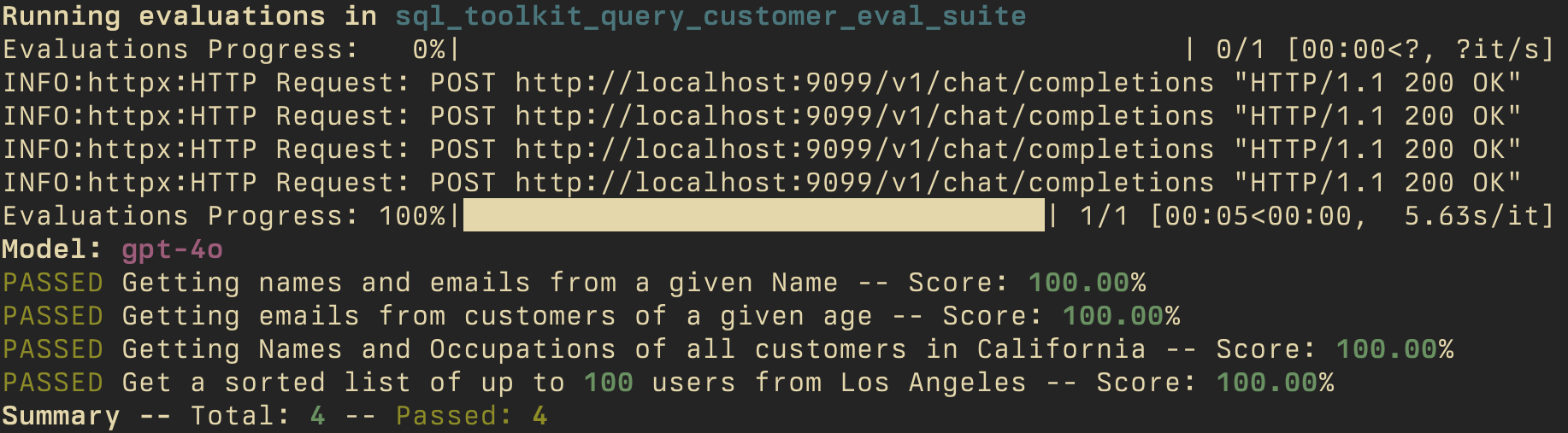

arcade evals --cloud evalsThis will connect to Arcade, and run each case on our evaluation suite. The output should look like this:

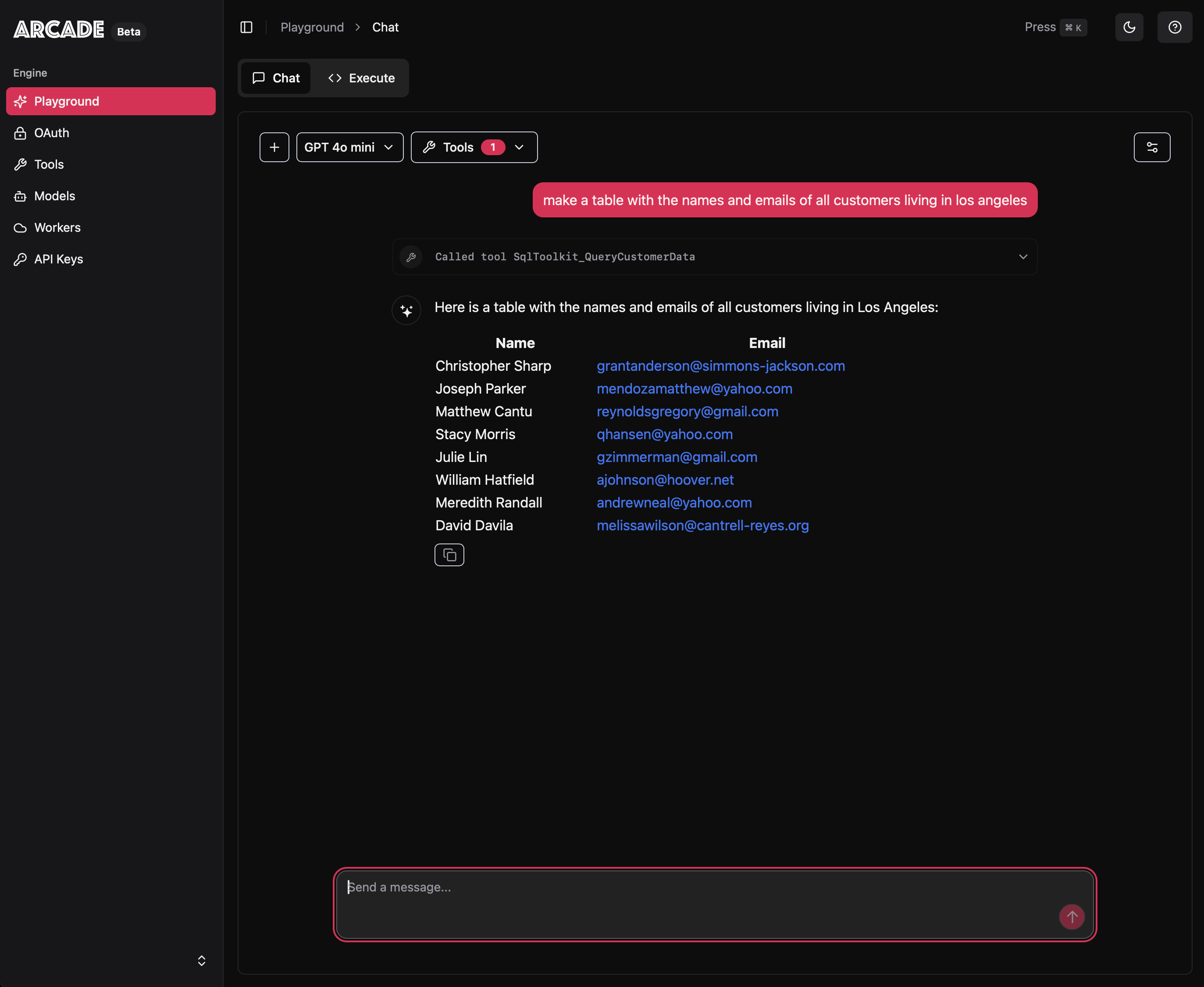

Invoke our new tool from the Playground

Congratulations! All evals have passed, and now we're ready to see how our tool interacts in an actual chat scenario. Login to the Arcade dashboard, and ask it to retrieve some customers

Now we can interact with our database using natural language!

Next Steps

Now that you've seen how to build an AI-powered database interface with Arcade, you can create your own custom solution:

- Customize the queries based on your team's needs

- Test thoroughly before deploying

The entire process takes about half an hour, and it opens up your customer data to everyone on your team—regardless of their technical background.

Ready to try it yourself? Visit arcade.dev to get started.